Yelp: A Story Of Building An Experimentation Program

“Facebook’s infrastructure helps you to abstract away all the complexity of experimentation to help you move faster. Whereas Yelp was about giving people more control, providing more detail, educating, and leaving it to people for interpretation”.

Introduction

When it comes to Product Management and Experimentation, Rohan Katyal has done it all.

Rohan is currently a Product Manager at Facebook (Meta), working in the New Product Experimentation team on zero-to-one product development. He has also led messaging monetisation on Messenger and Instagram, growing Click to WhatsApp ads to over 1M monthly advertisers.

Rohan is best known for building and scaling the Yelp experimentation program. His approach to democratising experimentation at Yelp is the subject of a Harvard Business School case study.

Yelp is the leading US publisher of crowd-sourced business reviews, available through its popular website and app. More than 250 million customer reviews have been listed, with 100+ million unique visitors across Yelp’s digital platforms. Yelp generates more than $1.3B in revenue per annum.

When it comes to embedding and scaling experimentation programs, Rohan knows what works. In this article, Rohan takes us on his personal journey of establishing the Yelp experimentation program, sharing key practical insights and learnings.

In this article we discuss:

Focus on people

Facebook vs Yelp

Metrics that matter

Learning, not shipping

The experimentation scorecard

Experimentation cultural transformation

1. Focus on people

It can be very easy to get caught up in experimentation technology and analysis.

The people side of experimentation is often overlooked, yet it is a huge part of how you experiment. You can have the best platform, systems, and processes in place, but if people don’t understand how to use them, all is lost. People will make bad decisions and interpret data and insights incorrectly.

Rohan suggests his most important learning from establishing the Yelp experimentation program is to not overlook the people side of the change process.

“I learned more about the people side of experimentation. Everything that I’ve learned, I’ve applied it afterwards - all the growth work I’ve done, all the experimentation work I’ve done. It ranks very highly. It completely broadened my perspective.”

Growth is a game of inches – you hypothesise, you test, you learn, and you iterate. People play a critical role in helping to build this muscle in an organisation.

It’s not about the tech, it’s about the people.

People are the key reason why your Growth or Experimentation program will succeed or fail.

2. Facebook vs Yelp

Rohan suggests that the experimentation practices at Facebook are very different to Yelp.

Fundamentally, both Facebook and Yelp follow the same scientific process of experimentation. However, that’s where the similarities end.

EXPERIMENTATION AT YELP

At Yelp, the experimentation landscape was very different to Facebook.

It’s not that the organisation wasn’t experimenting. There was extensive experimentation. Experimentation at Yelp was just less mature.

Some of the early challenges with experimentation at Yelp included:

Tests being performed in silos

No cross-team experimentation intelligence

No experimentation guardrails

Subjective, people-led decision making

Lots of ad-hoc analysis

Hacked-together infrastructure

Experimentation at Yelp was more people-led and culture-led. There was a lot of hand-holding in the experimentation practice.

The Yelp approach was to establish cultural integration of experimentation and product management practices to help people understand meaning and context.

For instance, on an experimentation scorecard, what is a p-value? What does the p-value mean? How do you interpret the p-value?
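To make the p-value question concrete, here is a minimal sketch of a textbook two-proportion z-test. It is an illustration only: the article does not say how the Yelp scorecard computed its p-values, and the function name and inputs are hypothetical. The p-value it returns is the probability of seeing a difference at least this large if the two variants truly performed the same.

```python
import math


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for a difference in conversion rates (pooled z-test).

    conv_a / n_a: conversions and users in control (hypothetical inputs)
    conv_b / n_b: conversions and users in treatment (hypothetical inputs)
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # No variation at all (every user converted, or none did):
        # there is no evidence of a difference.
        return 1.0
    z = (rate_b - rate_a) / std_err
    # Probability that a standard normal variable is at least as extreme as z.
    return math.erfc(abs(z) / math.sqrt(2))
```

A small p-value says the observed difference would be surprising under "no real effect". It does not say how large or how valuable the effect is, which is why a scorecard typically shows it alongside the measured change.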

“Facebook’s approach was different. We wanted to have a bias for moving fast. So, instead of educating, and having education programs, we’re going to create a tool that helps you interpret the data as well.”

At Yelp, individual experiments were taking a long time to perform due to the manual nature of experimentation. Post-analysis was taking between 7 and 14 days.

A car crash was inevitably waiting around the corner.

Experimentation had become a blocker in the organisation. Product velocity was decreasing, rather than increasing.

“We wanted to be able to make decisions faster. We wanted to be able to ship faster. We wanted to be able to build a better product for our users and deliver more value for investors, and shareholders of the company.”

EXPERIMENTATION AT FACEBOOK

Switching gears, experimentation at Facebook is mature, industrialised, and highly sophisticated.

Facebook is far more mature, given its scale, investment, and 15-year head start on Yelp. At Facebook, all the tools, systems, and processes already exist and are very robust.

What does experimentation feel like at Facebook?

Centralised data governance

Experimentation office hours

Experimentation resource support

Experimentation guardrails

Centralised metrics / Product specific metrics

Standardisation of processes, systems, and communications

What’s interesting at Facebook?

The annotation is the same. Everyone speaks the same language when you look at the experimentation tool. Red is bad, green is good. The tool provides this information straight up.

If you want to dive deeper into the data and statistics, it’s all there under the hood in the tool. Otherwise, you can just run as fast as you like.

“Facebook’s infrastructure helps you to abstract away all the complexity of experimentation to help you move faster. Whereas Yelp was about giving people more control, providing more detail, educating, and leaving it to people for interpretation”.

3. Metrics that matter

Prior to implementation of the Bunsen Experimentation Platform at Yelp, there were multiple definitions of the same metric. This resulted in lots of confusion.

With Bunsen came centralisation of analysis and logging. The next logical step was to centralise metrics.

Standardisation of experimentation metrics at Yelp was a complicated process that took nearly nine months, producing a set of 19 core metrics.

Yelp landed on three categories of metrics:

Decision metrics (primary)

Tracking metrics (secondary)

Guardrail metrics (do no harm)

Decision metrics:

These are the metrics that you care about the most – also referred to as goal metrics. Decision metrics help you to understand if you should ship product, or not.

Tracking metrics:

These are the second order metrics that you care about. Tracking metrics aren’t primarily used to inform product decisions. However, you need to understand that your experiments aren’t negatively affecting these metrics.

Guardrail metrics:

These are the “do no harm” metrics. If an experiment exceeded the limits for a Guardrail metric it would trigger an alert, requiring review from business owners.

“If a Guardrail metric is hit, beyond the accepted range, key stakeholders would receive an email alert. If the experiment crossed the fully acceptable limit, the experiment was paused until business owners agreed to resume the experiment.”
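The two-tier behaviour described in the quote (alert first, pause beyond a harder limit) can be sketched as follows. This is an assumption-laden illustration, not Bunsen's implementation: the names, the thresholds, and the convention that a drop in the metric is the harmful direction are all hypothetical.

```python
from enum import Enum


class GuardrailAction(Enum):
    OK = "ok"
    ALERT = "alert"  # email key stakeholders
    PAUSE = "pause"  # pause until business owners agree to resume


def check_guardrail(relative_change, alert_limit, pause_limit):
    """Classify a guardrail metric's change in treatment versus control.

    relative_change: e.g. -0.03 for a 3% drop (assumes a drop is harmful)
    alert_limit:     largest tolerated drop before an alert, e.g. 0.02
    pause_limit:     largest tolerated drop before pausing, e.g. 0.05
    All limits are hypothetical values chosen for illustration.
    """
    if not 0 <= alert_limit <= pause_limit:
        raise ValueError("expected 0 <= alert_limit <= pause_limit")
    drop = -relative_change  # positive when the metric got worse
    if drop > pause_limit:
        return GuardrailAction.PAUSE
    if drop > alert_limit:
        return GuardrailAction.ALERT
    return GuardrailAction.OK
```

For example, with an alert limit of 2% and a pause limit of 5%, a 3% drop would trigger an alert while an 8% drop would pause the experiment.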

The end game of the Yelp experimentation program was to increase product velocity. However, the experimentation guardrails added more friction due to the cross-team intelligence required to monitor experiments.

This seemed somewhat counterintuitive to Yelp’s experimentation objectives but was a trade-off that proved worthwhile.

“We saw a significant increase in the quality of decisions being made. It was a trade-off we were willing to make.”

If you’re enjoying this article, listen to the full conversation on the Experimentation Masters Podcast

4. Learning, not shipping

When not done correctly, experimentation is treated as a validation tool, not a learning tool. Experimentation is merely treated as a precursor to shipping product.

If you're focused on experimenting, as a precursor to shipping, you're not actually thinking about learning.

There are reasons for this:

Overconfidence in our product intuition

Overconfidence in the quality of our ideas

Being forced to experiment

Egocentric decision-making

Experimentation as a validation tool does not produce organisational learning: you’re not testing and refining your hypotheses, and you’re not removing biases from decision-making processes.

Experimentation can help you understand if something's good. It also tells you that something's not good. You've tested it, it didn't work, you learn.

5. The experimentation scorecard

One of the big challenges at Yelp was that there wasn’t a common language for experimentation.

Every time an experiment was performed, and the results and outcomes written up, people would use different phrasing and terminology.

It was impossible for two people to have a discussion around experimentation with a shared understanding. This produced inefficiencies and misunderstanding.

There were two primary problems:

No common language for experimentation

Lack of trust in data analysis

A lot of time and effort was wasted interpreting data and information. Every time a presentation was conducted for the Executive Team, a new slide deck would need to be created.

For example, there was no shared understanding between Data teams of a standardised definition and measure for an Active User. Different teams were using different definitions.

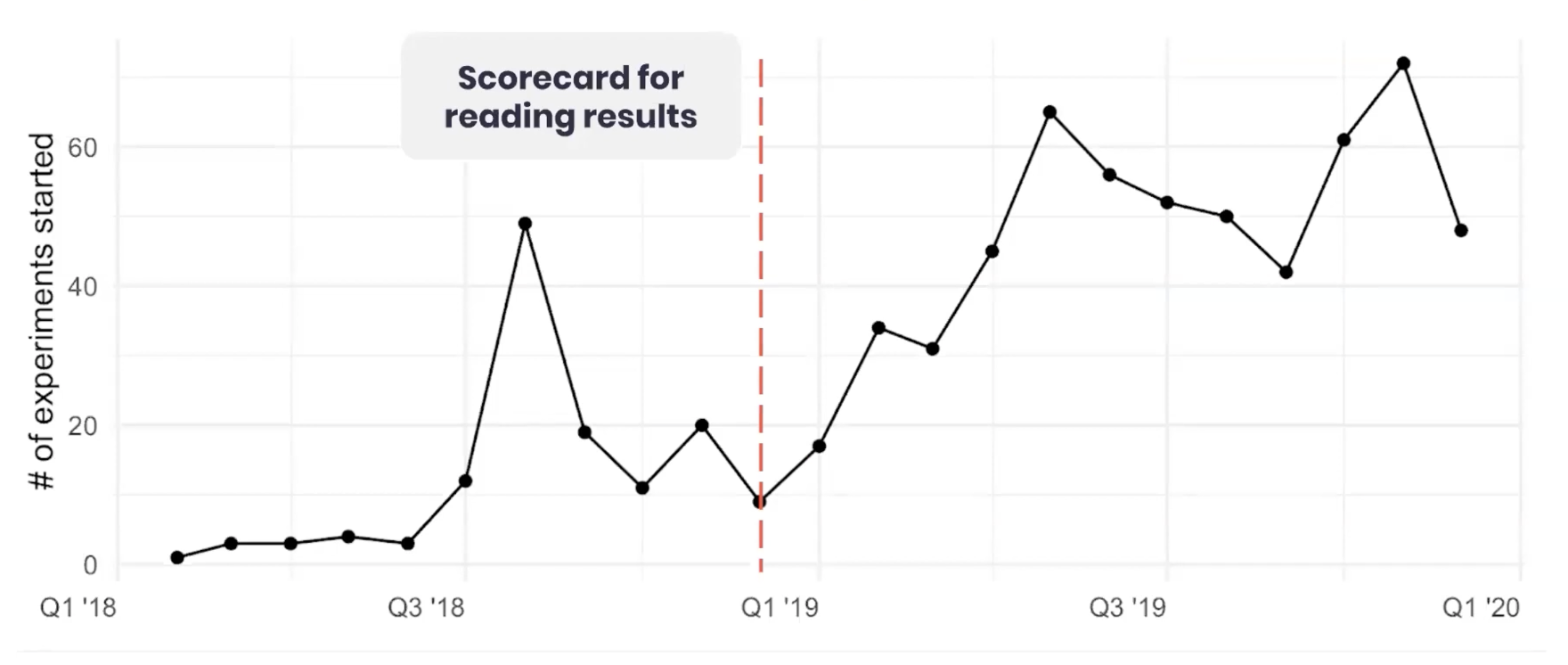

Introduction of the Yelp Experimentation Scorecard

Introduction of the Experimentation Scorecard changed all that.

Clearer and easier interpretation of results produced faster and more accurate decision-making. Experimentation velocity also increased exponentially.

“The Experimentation Scorecard enabled everyone to speak the same language about experimentation. It reduced the overhead needed to develop product. This became a strong forcing function to encourage people to transition onto the Bunsen experimentation platform”.

6. Experimentation cultural transformation

The Yelp experimentation cultural transformation focussed on three key areas:

Education

Standardisation

Monitoring and measurement

1. EDUCATION:

Educating the Yelp business on experimentation was a big task given the size of the organisation. To get ahead of the curve, experimentation education began in parallel with experimentation standardisation.

To level up education, Yelp got buy-in from all the key teams.

(A). Experimentation Guide

The Data Science team developed an Experimentation Guide that was mandated by the VPs. Everyone had to complete the Experimentation Guide.

Data Science also developed a short 10-question quiz that everyone had to complete.

(B). PM Training

Data Science introduced a requirement that all current Yelp PMs pass experimentation training.

For new PMs joining the company, experimentation training became part of the onboarding experience.

This helped get people up to speed with experimentation practices at Yelp, while also providing a basic understanding of statistics.

(C). Bunsen Deputies

Yelp also wanted to create Bunsen Deputies (evangelists) across different parts of the company. To do that, the team developed a four-week program to train people to become Bunsen Deputies.

The Bunsen Deputies could address any Bunsen problems or questions, being the first touch point in their own teams. This helped to embed experimentation advocates into key product teams, further driving widespread adoption of the Bunsen experimentation platform.

2. STANDARDISATION:

The team aimed to standardise the end-to-end experimentation process to ensure consistency, repeatability, and efficiency.

Yelp standardised the experimentation process on four levels:

How people test

How experiments are created

How results are interpreted

How experiments are communicated

“If different people don't speak the same language, you will not be able to scale experimentation in a business.”

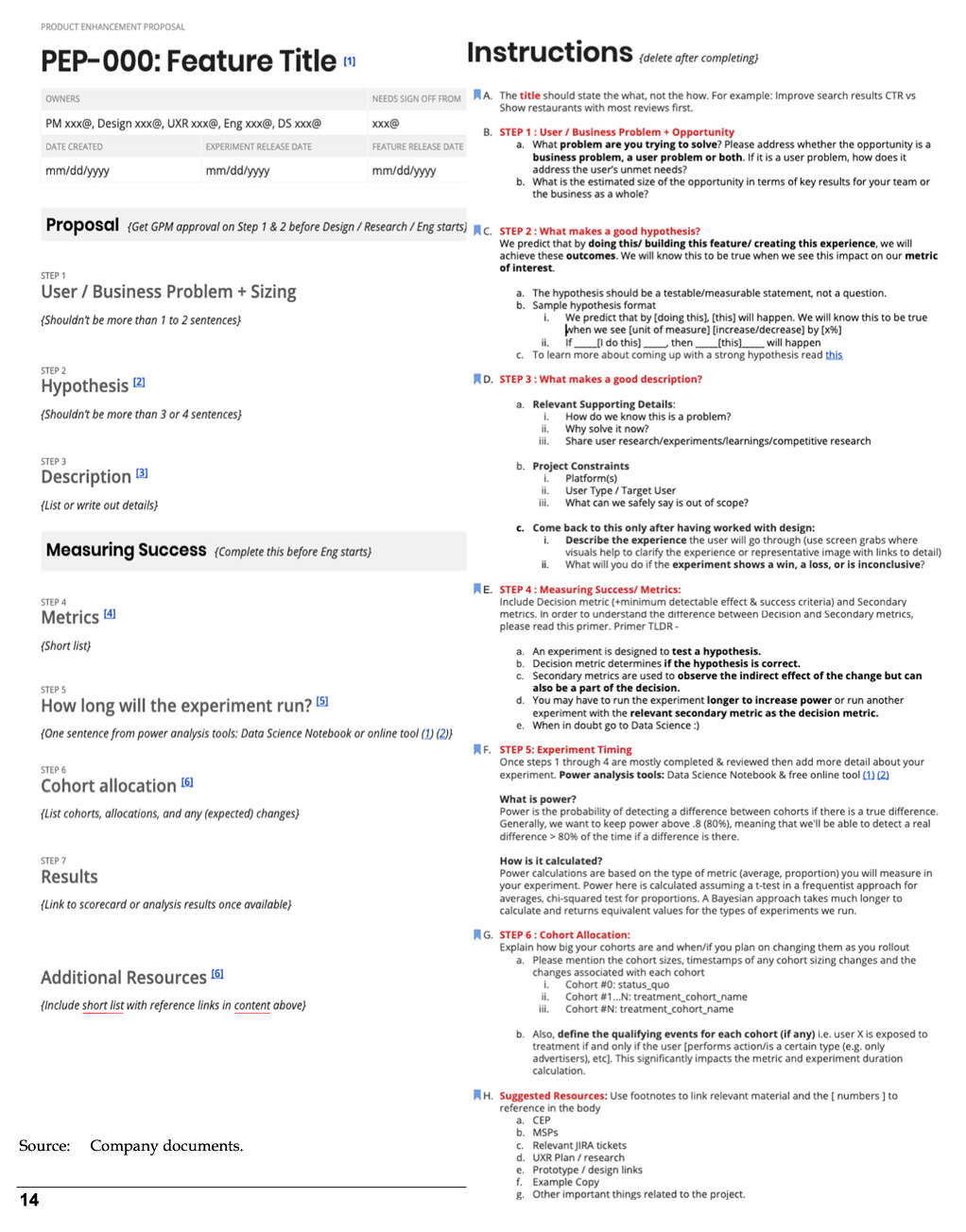

(A). The Product Enhancement Proposal (PEP)

Before an experiment was planned, or executed, a PEP was required to be completed.

Essentially, the PEP was a template to guide experimentation documentation – what is the business/user problem, what is the hypothesis, what are key metrics etc.

The PEP template ensured a consistent conversation around experimentation design in the business.
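As an illustration of how such a template can enforce a consistent conversation, here is a minimal sketch that models the three sections named above and reports which ones are still empty. The class, field names, and completeness check are hypothetical. The article does not describe the actual PEP format beyond these sections.

```python
from dataclasses import dataclass, field


@dataclass
class ProductEnhancementProposal:
    """Hypothetical model of a PEP with only the sections the article names."""

    problem: str  # the business/user problem
    hypothesis: str  # what the team expects to happen and why
    key_metrics: list[str] = field(default_factory=list)

    def missing_sections(self):
        """Return the names of sections that have not been filled in."""
        missing = []
        if not self.problem.strip():
            missing.append("problem")
        if not self.hypothesis.strip():
            missing.append("hypothesis")
        if not self.key_metrics:
            missing.append("key_metrics")
        return missing
```

A proposal would be treated as ready for review only when `missing_sections()` returns an empty list.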

Yelp experimentation Product Enhancement Proposal (PEP)

3. MONITORING & MEASUREMENT:

Better measurement and monitoring of experiments were facilitated by the Experimentation Scorecard. Now, Yelp was talking about experimentation in the same way.

The question remained: how do we measure the success of the experimentation program?

To measure and monitor the efficacy of the experimentation program, Yelp started conducting regular meta-analyses of past experiments.

Yelp was seeking to determine the proportion of experiments that were correctly designed and executed. This was initially conducted as a one-time exercise.

Eventually, this became the North Star of the Yelp experimentation program, with the team performing a meta-analysis every quarter, and reporting it to the Executive Leadership Team.

“Over the course of the first one and a half years, we were able to improve the proportion of correct decisions, the decision accuracy metric that we used, over two times. We saw a 120% improvement in correct decisions being made at Yelp”.
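The two figures in the quote are consistent: a 120% relative improvement means the metric ended at 2.2 times its starting value, which is "over two times". The sketch below works through that arithmetic. The experiment counts are invented purely for illustration and are not Yelp's data, and the function names are hypothetical.

```python
def decision_accuracy(correct_decisions, total_decisions):
    """Proportion of reviewed experiments that led to a correct decision."""
    if total_decisions <= 0:
        raise ValueError("total_decisions must be positive")
    return correct_decisions / total_decisions


def relative_improvement(before, after):
    """Relative change from before to after, e.g. 1.2 means a 120% improvement."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (after - before) / before


# Hypothetical meta-analysis results from two review periods.
accuracy_before = decision_accuracy(10, 40)  # 25% of decisions judged correct
accuracy_after = decision_accuracy(22, 40)   # 55% of decisions judged correct

improvement = relative_improvement(accuracy_before, accuracy_after)  # about 1.2
multiple = accuracy_after / accuracy_before                          # about 2.2
```

Reporting both the relative improvement and the multiple avoids a common misreading, where "120% improvement" is mistaken for "1.2 times".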
