Resources

Links

If you want to be the best, you need to learn from the best in the world.

I’ve curated a list of learning materials recommended to me by world-leading experts in experimentation, innovation and product design from the Experimentation Masters Podcast.

-

Experimentation at Spotify: Three Lessons for Maximizing Impact in Innovation

-

Spotify’s New Experimentation Platform (Part 1)

-

Spotify’s New Experimentation Platform (Part 2)

-

Confidence — An Experimentation Platform from Spotify

-

A/B Tests: Two Important Uncommon Topics: Trust & OEC

-

Adopting Evidence-Guided Development in Your Org

-

M&S CEO blames new website’s “settling in” period for 8.1% online sales drop

-

Major Redesigns Usually Fail

-

How We Lost (and found) Millions by Not A/B Testing

-

Can I A/B Test That?

-

A/B Testing 101

-

AI and the Automation of Work

-

Experimentation in Customer Advocacy, Relationship & Engagement Teams

-

Detecting Interaction Effects in Online Experimentation

-

Interaction Effects in Online Experimentation

-

Avoiding Interaction Effects in Online Experimentation

-

A/B Interactions: A Call to Relax

-

How to Validate Your B2B Startup Idea

-

Netflix Tech Blog - Experimentation

-

Experiments at Airbnb

-

Amazon - Experimentation

-

Fast Company - Change or Die

-

Good Experiment, Bad Experiment

-

Towards Data Science

-

Lyft - Experimentation in a Ridesharing Marketplace (Part 1) - Interference Across a Network

-

Lyft - Experimentation in a Ridesharing Marketplace (Part 2) - Simulating a Ridesharing Marketplace

-

Lyft - Experimentation in a Ridesharing Marketplace (Part 3) - Bias and Variance

-

Blog - Lyft Engineering

-

Growth Blog - John Egan

-

Blog - Evan Miller

-

Improving Duolingo One Experiment at a Time

-

How Duolingo Runs Experiments at Scale

-

The Tenets of A/B Testing From Duolingo's Master Growth Hacker

-

Blog - Eppo

-

Blog - Optimizely

-

Statsig - Experimentation Virtual Meetup - AMA With Ronny Kohavi

-

Building Products at Facebook

-

Blog - Strava Engineering

-

An Introduction to Communities of Practice

-

Cultivating Communities of Practice: A Guide to Managing Knowledge - Seven Principles for Cultivating Communities of Practice

-

How Optimizely (Almost) Got Me Fired

-

Microsoft Experimentation Platform

-

16 PLG Leaders on What Separates Good From Great Companies When it Comes to Experimentation

-

It Takes a Flywheel to Fly: Kickstarting and Keeping the A/B Testing Momentum

-

Spotify - Choosing a Sequential Testing Framework - Comparisons and Discussions

-

Blog - Vista Data and Analytics

-

Building a Culture of Experimentation

-

Organising for Scaled Experimentation

-

Automated Sample Ratio Mismatch (SRM) Detection and Analysis

-

Time-Split Testing for Pricing Optimisation at Scale

-

The Negative Test

-

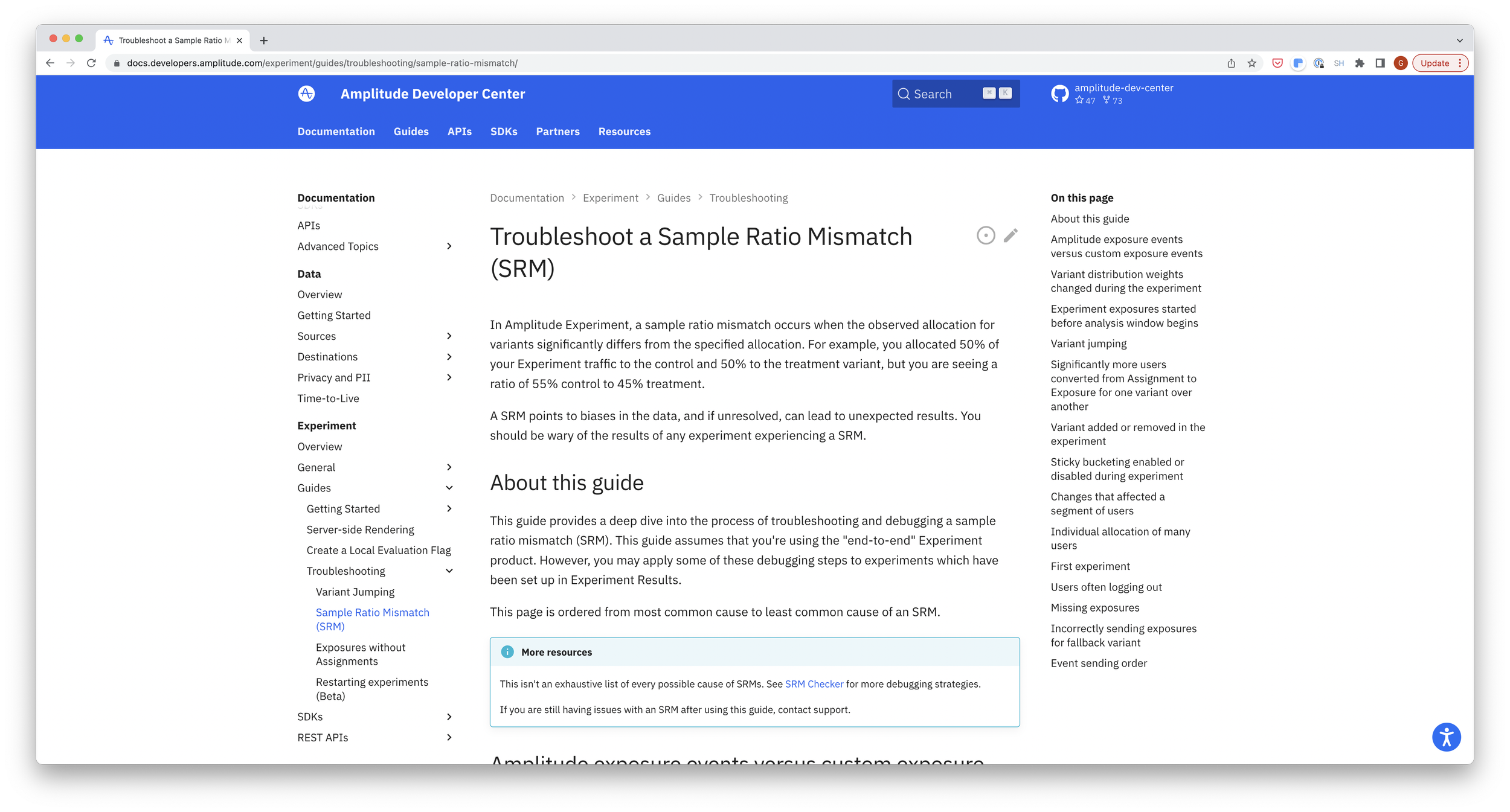

Amplitude - Troubleshoot a Sample Ratio Mismatch (SRM)

-

Peeking, Sequential Testing and Interim Analyses in A/B Testing

-

Statistical Significance Clearly Explained

-

Experimentation Metrics: Deciding What to Measure

-

What Should the Primary Metric be for Experimentation Platforms?

-

Autopsy of a Failed Growth Hack

-

The Wrong Way to Analyse Experiments

-

Evan Miller - Sample Size Calculator

-

Input vs Output Metrics in Experimentation: How to Decide What to Measure

-

15 Important Product Metrics You Should Be Tracking

-

What's the Purpose of a Growth Team?

-

Statistical Significance on a Shoestring Budget

-

Lukas Vermeer - How to Run Many Tests at Once: Interaction Avoidance & Detection

-

Netflix Technology Blog

-

10 Lessons From Building an Experimentation Platform

-

Supercharging A/B Testing at Uber

-

Creating Communities of Practice

-

Twyman's Law and Controlled Experiments

-

GoodUI.org

-

Narrative Not PowerPoint

-

From 10s to 1000s: How to Scale Experimentation Velocity

-

Sample Ratio Mismatch (SRM) with Lukas Vermeer

-

SRM Checker

-

Why We Use Experimentation Quality as The Main KPI For Our Experimentation Platform

-

Experimentation in The Modern Digital Firm

-

How Experimentation Helps You Build Better Travel Digital Products

-

It Takes a Flywheel to Fly

-

How to Correctly Calculate Sample Size in A/B Testing

-

Get More Wins: Experimentation Metrics For Program Success

-

Enabling Experimentation at Your Organisation: Determining Your Team Structure

-

Booking.com Datascience

-

Engineers @ Optimizely

-

How to Build and Structure a Conversion Optimisation Team

-

Vishal Kapoor: Product Experimentation - From Zero to One

-

Interference, Bias, and Variance in Two-Sided Marketplace Experimentation: Guidance for Platforms

-

eBay - The Design of A/B Tests in an Online Marketplace

-

Ton Wesseling - When Experimentation Starts as a Solution to Raise ROI

-

The Wheel of Experimentation

-

How Much Product Discovery is Enough?

-

Reforge 1 Hour Sprint Retrospective

-

LinkedIn Ran Undisclosed Social Experiments on 20 Million Users For Years To Study Job Success

-

How Airbnb Safeguards Changes in Production

-

Addressing The Challenges of Product Discovery

-

Addressing The Challenges of Product Discovery - Q&A Edition

-

Optimize To Be Wrong, Not Right

-

A Dozen Things I’ve Learned From Nassim Taleb About Optionality/Investing

-

Finally! Statistical significance clearly explained

-

Growth Loops Are The New Funnels

-

How Many Tests Can We Run?

-

Sample A/B Experiment For Strava

-

One on One's With Executives

-

Personalizing UX: Why Zillow Group Moved Beyond AB Testing

-

How Did Tropicana Lose $30 Million in a Packaging Redesign?

-

You're Probably Using NPS Wrong

-

Experimentation And Failure Fuel Innovation, So Let’s Give Each Other More Time

-

Act Like a Scientist

-

A Conversation with Mark Zuckerberg, Patrick Collison and Tyler Cowen

-

Ken Norton Blog - Bring The Donuts

-

Efficient A/B Testing With The AGILE Statistical Method

-

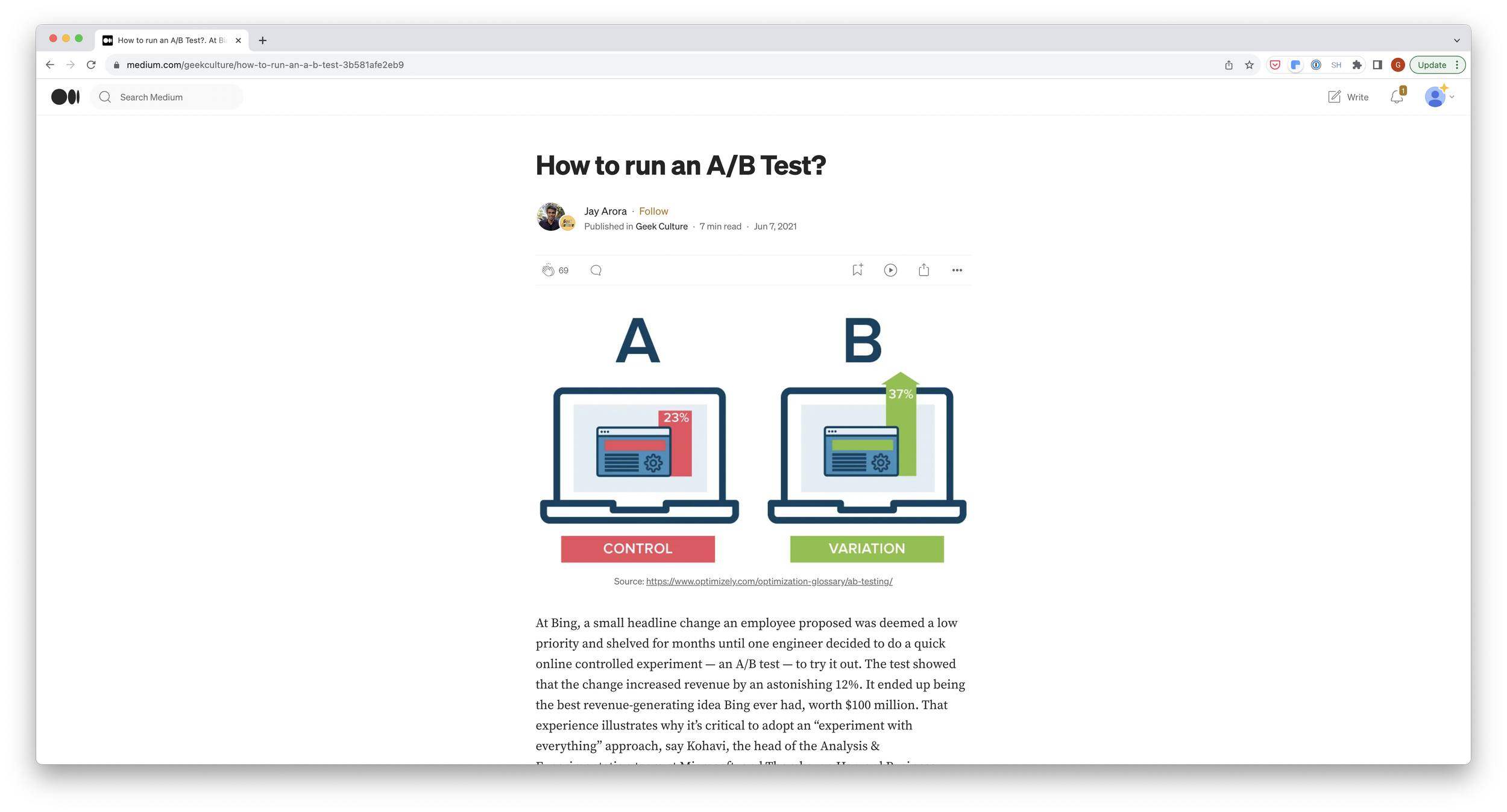

How To Run an A/B Test?

-

What Is Business Experimentation

-

How To Build An Experimentation Team

-

How To Set Up Hypotheses

-

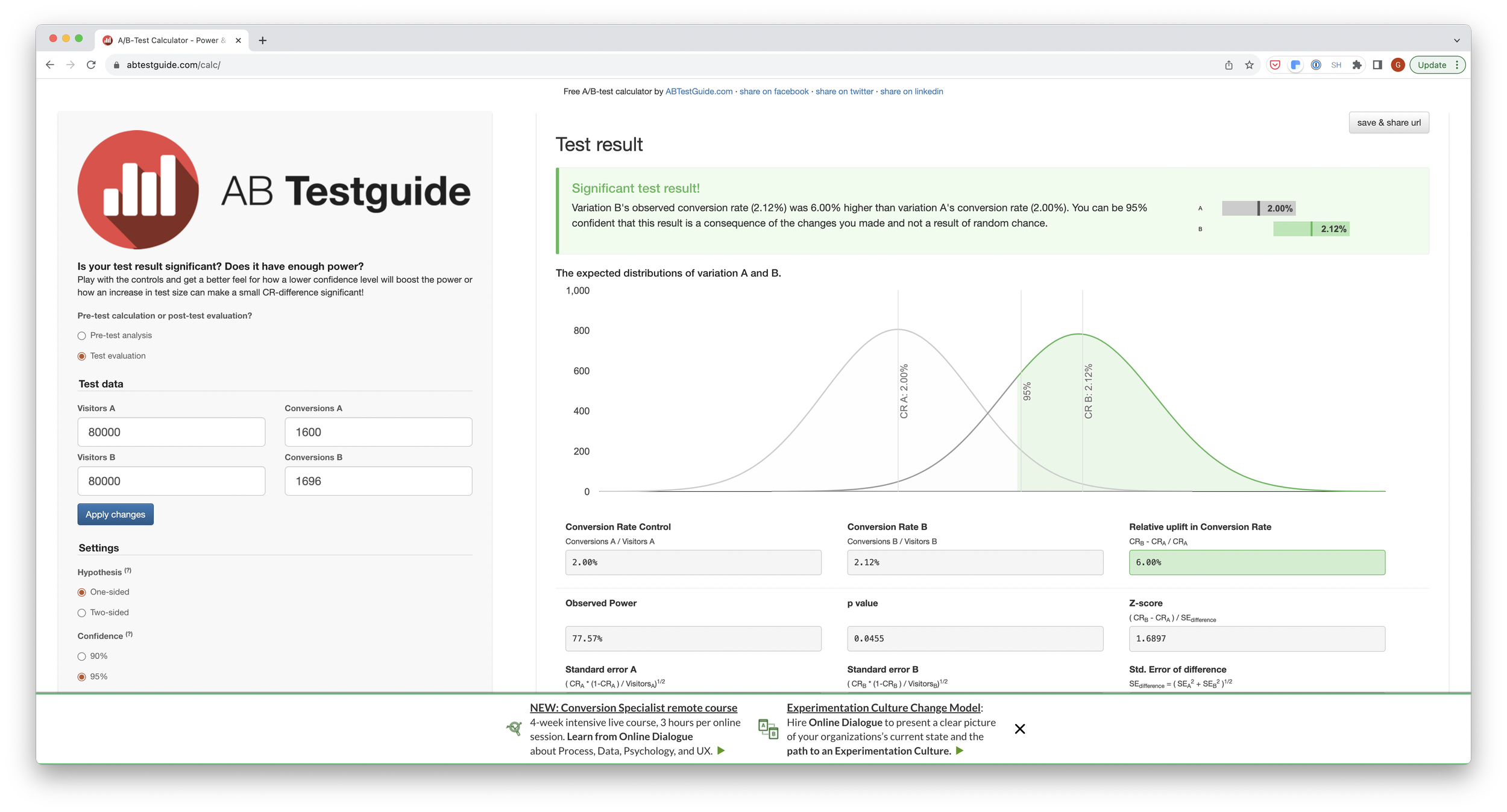

A/B Test Guide

-

Stop Micromanaging Product Strategy

-

Please, Please Don't A/B Test That

-

Scaling Airbnb's Experimentation Platform

-

Why Business Schools Need To Teach Experimentation

-

How Do A/B Tests Work?

-

Building Our Centralised Experimentation Platform

-

Reimagining Experimentation Analysis at Netflix

-

How We Scaled Experimentation at Hulu

-

Supporting Rapid Product Iteration with an Experimentation Analysis Platform

-

How We Reimagined A/B Testing at Squarespace

-

Modern Experimentation Platforms - How Seamless End-to-End Experimentation Workflows Supercharge Product Development

-

Democratising Online Controlled Experiments at Booking.com by Lukas Vermeer

-

Building a Culture of Experimentation

-

Decision-Making at Netflix

-

What is an A/B Test?

-

Interpreting A/B Test Results: False Positives and Statistical Significance

-

Interpreting A/B Test Results: False Negatives and Power

-

Building Confidence in a Decision

-

Experimentation is a Major Focus of Data Science Across Netflix

-

Netflix: A Culture of Learning

-

The Experimentation Culture at HelloFresh

-

How Etsy Handles Peeking in A/B Testing

-

Peeking Problem – The Fatal Mistake in A/B Testing and Experimentation

-

Multi-Armed Bandits And The Stitch Fix Experimentation Platform

-

There’s More To Experimentation Than A/B

-

Multi-Armed Bandit (MAB) – A/B Testing Sans Regret

-

Quasi Experimentation at Netflix

-

Key Challenges with Quasi Experiments at Netflix

-

How to Use Quasi-experiments and Counterfactuals to Build Great Products

-

Susan Athey - Stanford University - Counterfactual Inference

-

Switchback Tests and Randomized Experimentation Under Network Effects at DoorDash

-

Analyzing Switchback Experiments by Cluster Robust Standard Error to Prevent False Positive Results

-

Experiment Rigor for Switchback Experiment Analysis

-

Why It Matters Where You Randomize Users in A/B Experiments

-

How Not To Run an A/B Test

-

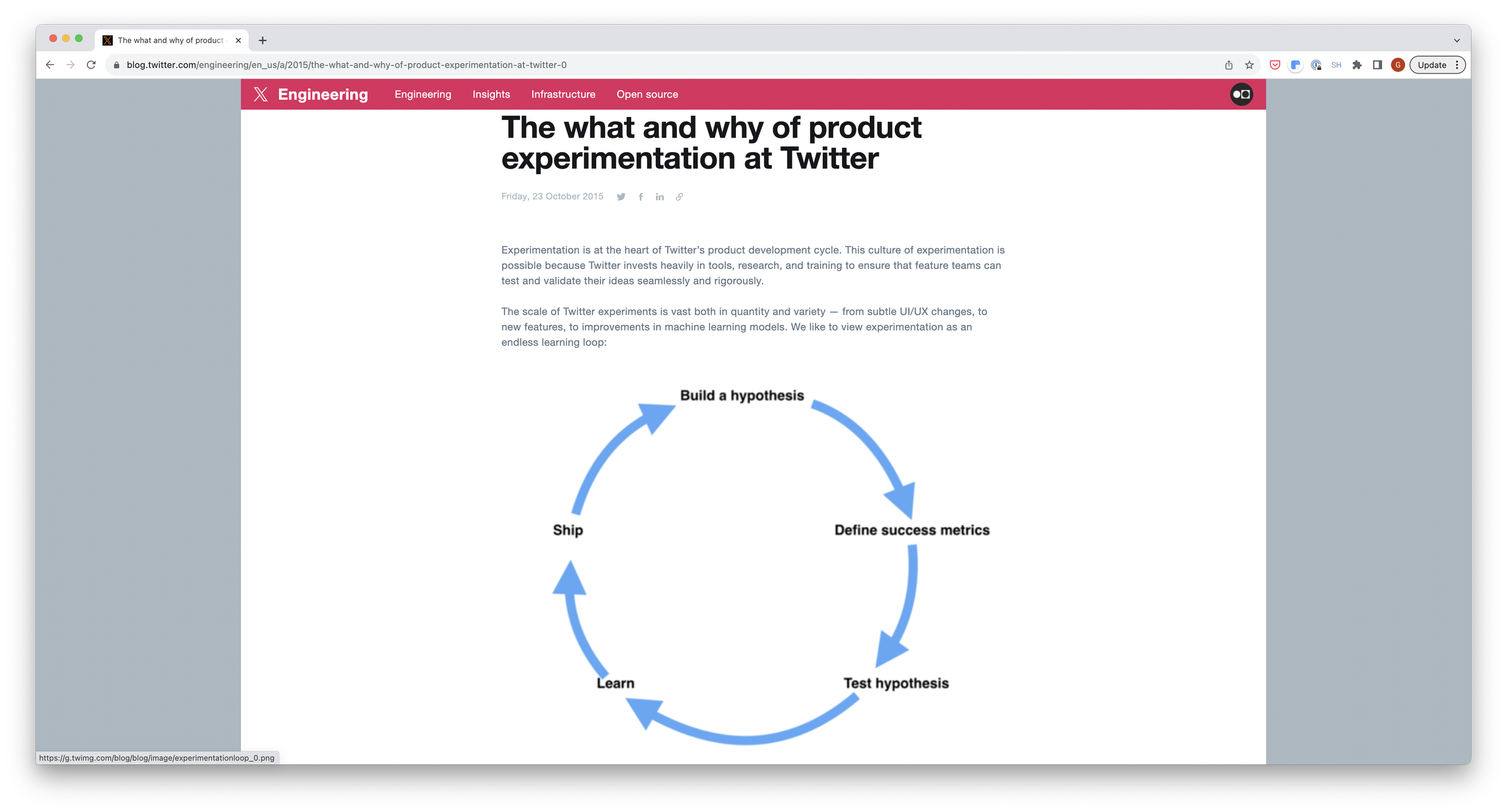

The What And Why of Product Experimentation at Twitter

-

Year 1 of an Experimentation Team: Challenges, Achievements & Learnings

-

Patterns of Trustworthy Experimentation: Pre-Experiment Stage

-

Leaky Abstractions In Online Experimentation Platforms

-

How Booking.com Increases The Power of Online Experiments With CUPED

-

How To Speed Up Your A/B Test

-

Improving Experimental Power through Control Using Predictions as Covariate (CUPAC)

-

Increasing The Sensitivity of A/B Tests By Utilizing The Variance Estimates of Experimental Units

-

Improving Online Experiment Capacity By 4X With Parallelization and Increased Sensitivity

-

The 4 Principles DoorDash Used to Increase Its Logistics Experiment Capacity by 1000%

-

How To Double A/B Testing Speed With CUPED

-

Reducing A/B Test Measurement Variance By 30%+

-

The Experimentation Gap

-

Behold, the Product Management Prioritization Menagerie

-

How We Rearchitected Mobile A/B Testing at The New York Times

-

The Surprising Power of Online Experiments

-

4 Principles for Making Experimentation Count

-

Guidelines for A/B Testing - 12 Guidelines to Help You Run More Effective, Trustworthy A/B Tests.

-

Chasing Statistical Ghosts in Experimentation

-

The First Ghost of Experimentation: It’s Either Significant or Noise

-

The Second Ghost of Experimentation: The Fallacy of Session Based Metrics

-

The Third Ghost of Experimentation: Multiple Comparisons

-

The Fourth Ghost of Experimentation: Peeking